Article

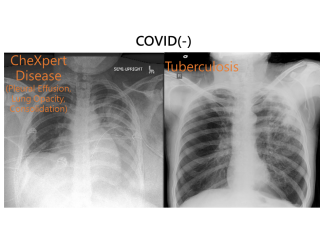

Artificial Intelligence Application: The 5 Most Promising Industries for AI

As AI enters everyday life, it is changing the face of many industries. The medical industry stands out among them. The MAIA platform developed by the Muen team is the world's first automated AI platform developed specifically for the medical field.

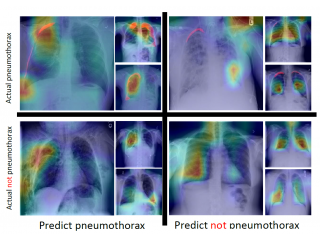

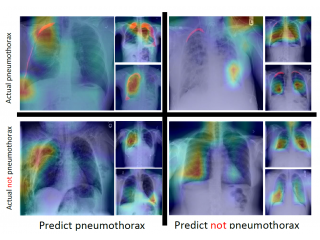

No Longer a Black Box: AI Makes Sense, Too! A Discussion of Explainable AI

Deep learning can solve many problems and is used in many tasks, such as image classification, object detection, and image segmentation. What does a DL model learn during training? What does it see in an image that forms the basis for its judgment? Explainable AI aims to answer such questions.

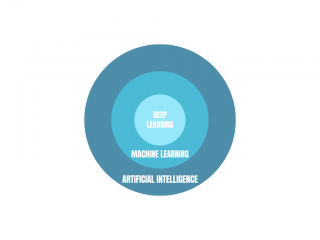

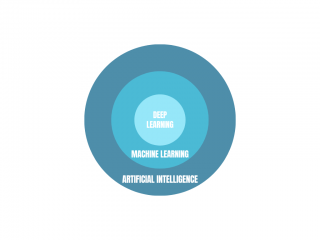

How Can AI Behave Like Humans? Discussing "Machine Learning" and "Deep Learning"

Two core AI technologies are machine learning (ML) and deep learning (DL), which is a subfield of ML. ML applies algorithms to large amounts of data to find patterns and summarize features. DL is based on artificial neural networks; its applications include AlphaGo and autonomous driving, and it has been integrated into medical services.

Medical AI Becomes Mainstream, Muen & CloudRiches Create New Digital Transformation

Muen and CloudRiches are combining our technologies to help medical users optimize the research process and make good use of cloud computing and data sharing. In the future, we will help various sectors create platforms and solutions and build successful business models.
