Written by: Doris Kao

Deep learning can be applied to many tasks, such as image classification, object detection, and image segmentation. Many people are curious about what deep learning (DL) models actually learn during training. In image tasks, what do they look at in an image to reach their decisions?

"Explainable AI (XAI)" addresses such questions; its methods include CAM, Grad-CAM, and Grad-CAM++. Here we introduce Grad-CAM, which was presented at the IEEE International Conference on Computer Vision (ICCV) in 2017.

Methods

Grad-CAM stands for Gradient-weighted Class Activation Mapping. As the paper describes it, for a decision of interest, Grad-CAM uses the gradient information flowing into the last convolutional layer of the CNN to assign an importance value to each neuron. For each input image, Grad-CAM generates a heat map that highlights the regions the model considers important for that decision. Grad-CAM can be applied to a wide range of CNN models without changing the model architecture or retraining the model.
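To make the description above concrete, here is a minimal NumPy sketch of the core Grad-CAM computation: average the gradients over each feature map to get one weight per map, take the weighted sum of the feature maps, and apply a ReLU. It is an illustration under stated assumptions, not the code used for the results in this article. The function name `grad_cam` and the toy model (global average pooling followed by a linear layer with weights `class_weights`) are hypothetical; in practice the activations and gradients would come from a deep learning framework's backpropagation.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Core Grad-CAM step for a single image.

    activations: feature maps of the last conv layer, shape (K, H, W)
    gradients:   d(class score) / d(activations), same shape
    Returns a heat map of shape (H, W), scaled to [0, 1].
    """
    # One importance weight per feature map: average its gradients spatially.
    weights = gradients.mean(axis=(1, 2))                   # shape (K,)
    # Weighted sum of the feature maps, then ReLU to keep positive evidence only.
    cam = np.tensordot(weights, activations, axes=1)         # shape (H, W)
    cam = np.maximum(cam, 0.0)
    # Scale to [0, 1] for display; leave an all-zero map unchanged.
    peak = cam.max()
    return cam / peak if peak > 0 else cam

# Toy example (assumed model): score = sum_k class_weights[k] * mean(activations[k]),
# so the gradient w.r.t. every position of map k is class_weights[k] / (H * W).
activations = np.array([
    [[1.0, 0.0], [0.0, 0.0]],   # map 0 fires in the top-left cell
    [[0.0, 0.0], [0.0, 2.0]],   # map 1 fires in the bottom-right cell
])
class_weights = np.array([1.0, -1.0])  # map 0 supports the class, map 1 opposes it
K, H, W = activations.shape
gradients = np.broadcast_to((class_weights / (H * W))[:, None, None], activations.shape)

heatmap = grad_cam(activations, gradients)
# Only the top-left cell is highlighted; the opposing map is removed by the ReLU.
```

The heat map has the spatial resolution of the last convolutional layer, so it is normally upsampled to the input image size before being displayed.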

Examples

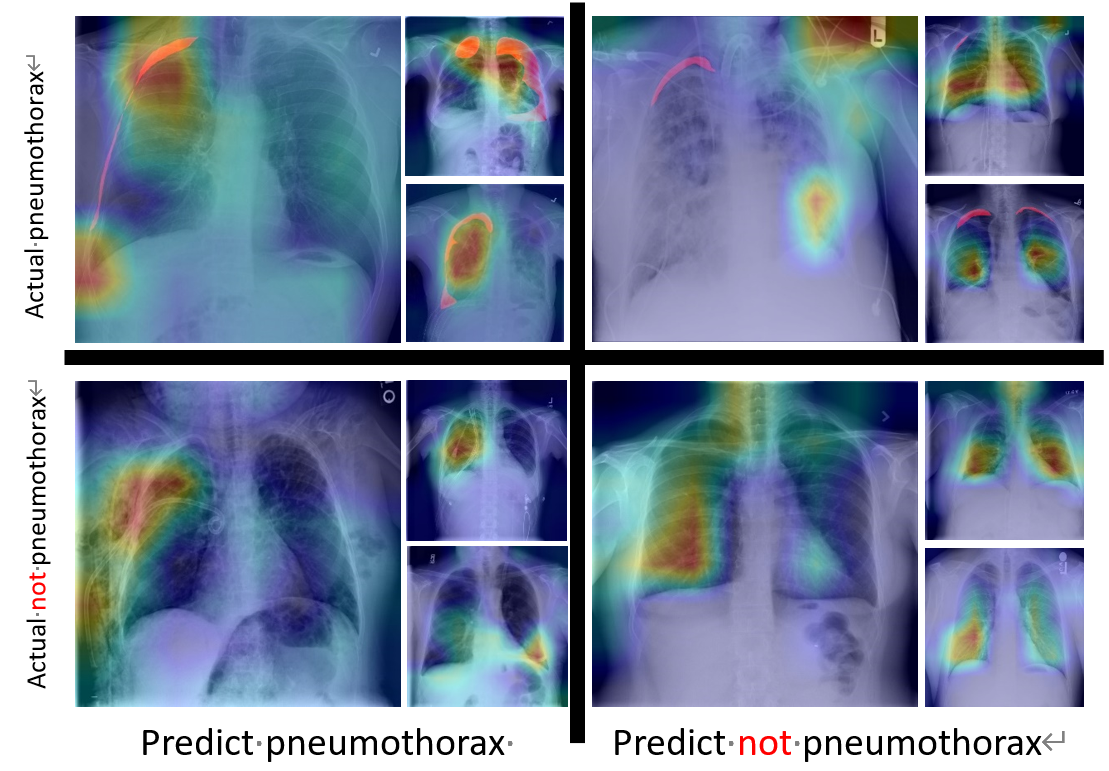

We use pneumothorax detection on chest X-rays as an example, with data from the Kaggle competition SIIM-ACR Pneumothorax Segmentation. The following results superimpose the heat map generated by Grad-CAM on each X-ray, with the segmentation provided by the competition marked in red. They fall into four cases:

1. Pneumothorax is predicted and present (True Positive)

2. Pneumothorax is predicted but not present (False Positive)

3. Pneumothorax is not predicted but present (False Negative)

4. Pneumothorax is not predicted and not present (True Negative)

The first case yields an interesting finding: when deciding whether pneumothorax is present, the model looks not only at the pneumothorax region but also at the chest tube visible in the X-ray.
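The superimposed images in this section combine a grayscale X-ray with a coarse heat map. The sketch below shows one simple way to build such an overlay with NumPy. It assumes the X-ray and the heat map are both scaled to [0, 1]; the helper names `upsample_nearest` and `overlay_heatmap`, the nearest-neighbour upsampling, and the orange tint are illustrative choices, not the method used to produce the figures here.

```python
import numpy as np

def upsample_nearest(cam, factor):
    """Enlarge a coarse heat map by an integer factor (nearest neighbour)."""
    return np.repeat(np.repeat(cam, factor, axis=0), factor, axis=1)

def overlay_heatmap(xray, cam, alpha=0.4, color=(1.0, 0.5, 0.0)):
    """Blend a heat map onto a grayscale image.

    xray: grayscale image in [0, 1], shape (H, W)
    cam:  heat map in [0, 1], same shape as xray
    Returns an RGB image in [0, 1], shape (H, W, 3).
    """
    gray_rgb = np.repeat(xray[..., None], 3, axis=2)
    # Blend strength follows the heat value, so cold regions stay untouched.
    strength = alpha * cam[..., None]
    return (1.0 - strength) * gray_rgb + strength * np.asarray(color)

# Toy example: a uniform 4x4 "X-ray" and a 2x2 heat map with one hot cell.
xray = np.full((4, 4), 0.5)
coarse_cam = np.array([[1.0, 0.0], [0.0, 0.0]])
blended = overlay_heatmap(xray, upsample_nearest(coarse_cam, 2))
# The top-left 2x2 block is tinted orange; the rest stays mid-gray.
```

Image libraries usually offer smoother interpolation and richer colour maps; nearest-neighbour upsampling is used here only to keep the example dependency-free.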

Discussion

In the past, the Stanford Machine Learning Group released the CheXpert dataset, which is labeled with 14 chest observations, including pneumothorax. Andrew Ng's team also trained models on this dataset and found no significant difference compared with radiologists' interpretations. Part of this result may be explained by the chest tubes that physicians had already placed in some patients: these tubes are so conspicuous that a model may learn to rely on them rather than on the pneumothorax itself.

With Grad-CAM, AI is less of a black box: it provides explainability that helps us make sense of the features a model has learned. In addition, reframing classification as segmentation encourages the model to focus on the lesions themselves, which also makes its decisions easier to explain.